Company Overview

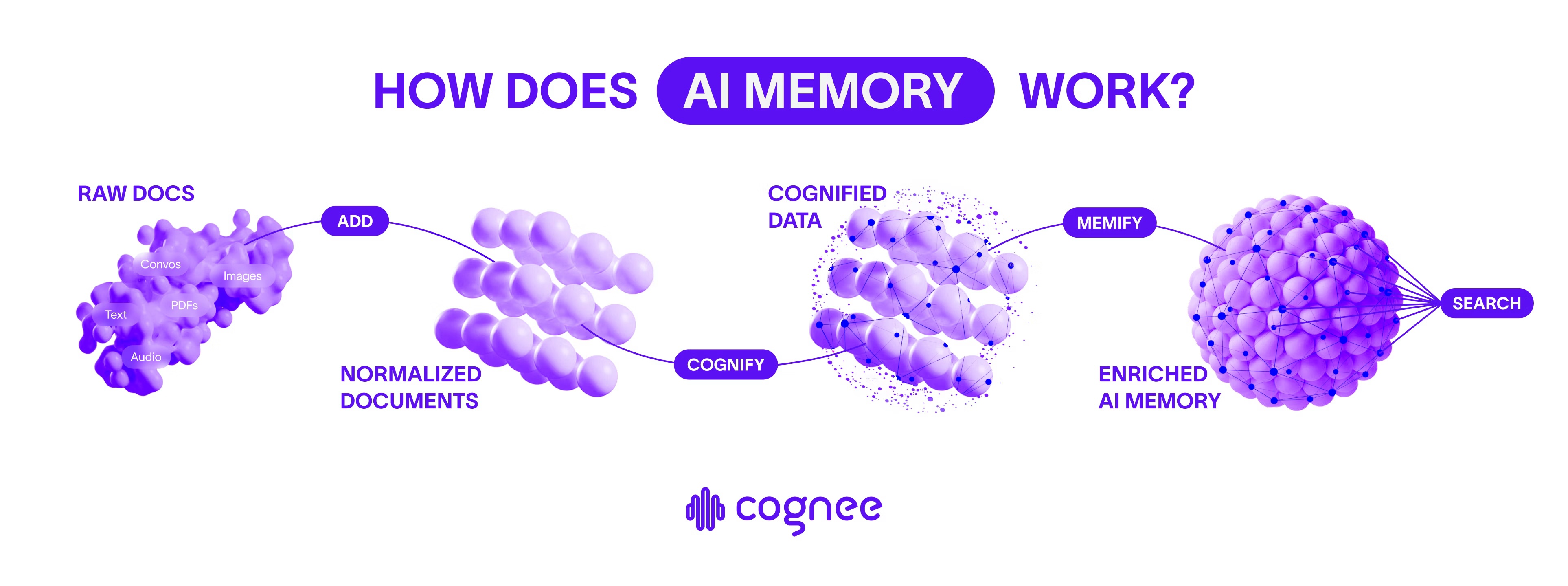

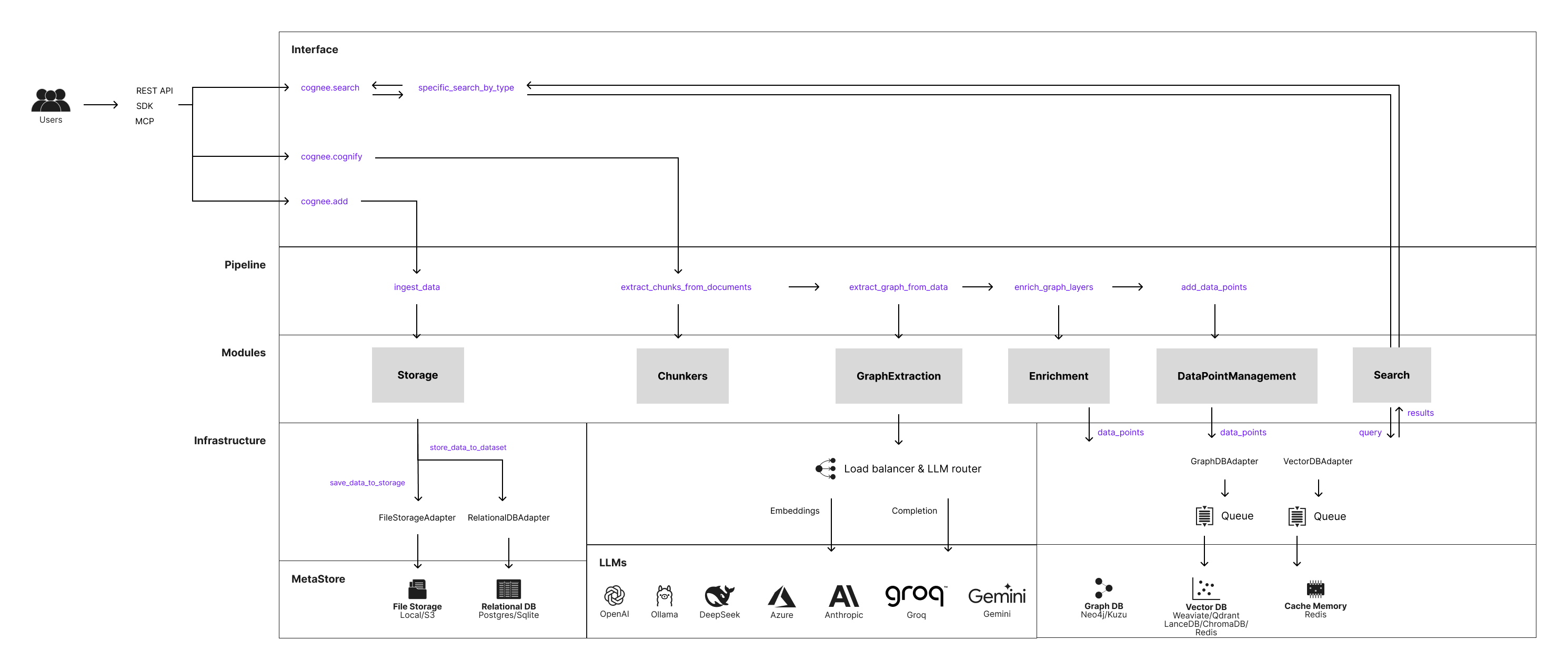

Cognee helps agents retrieve, reason, and remember with context that has structure and time. It ingests sources such as files, APIs, and databases, then chunks and embeds content , enriches entities and relations, and builds a queryable knowledge graph .

Applications built on Cognee query both the graph and the vectors to answer multi-step questions with clearer provenance. This platform is designed for teams building autonomous agents, copilots, and search in knowledge-heavy domains.

Figure 1: Query and transform your LLM context anywhere using cognee’s memory engine.

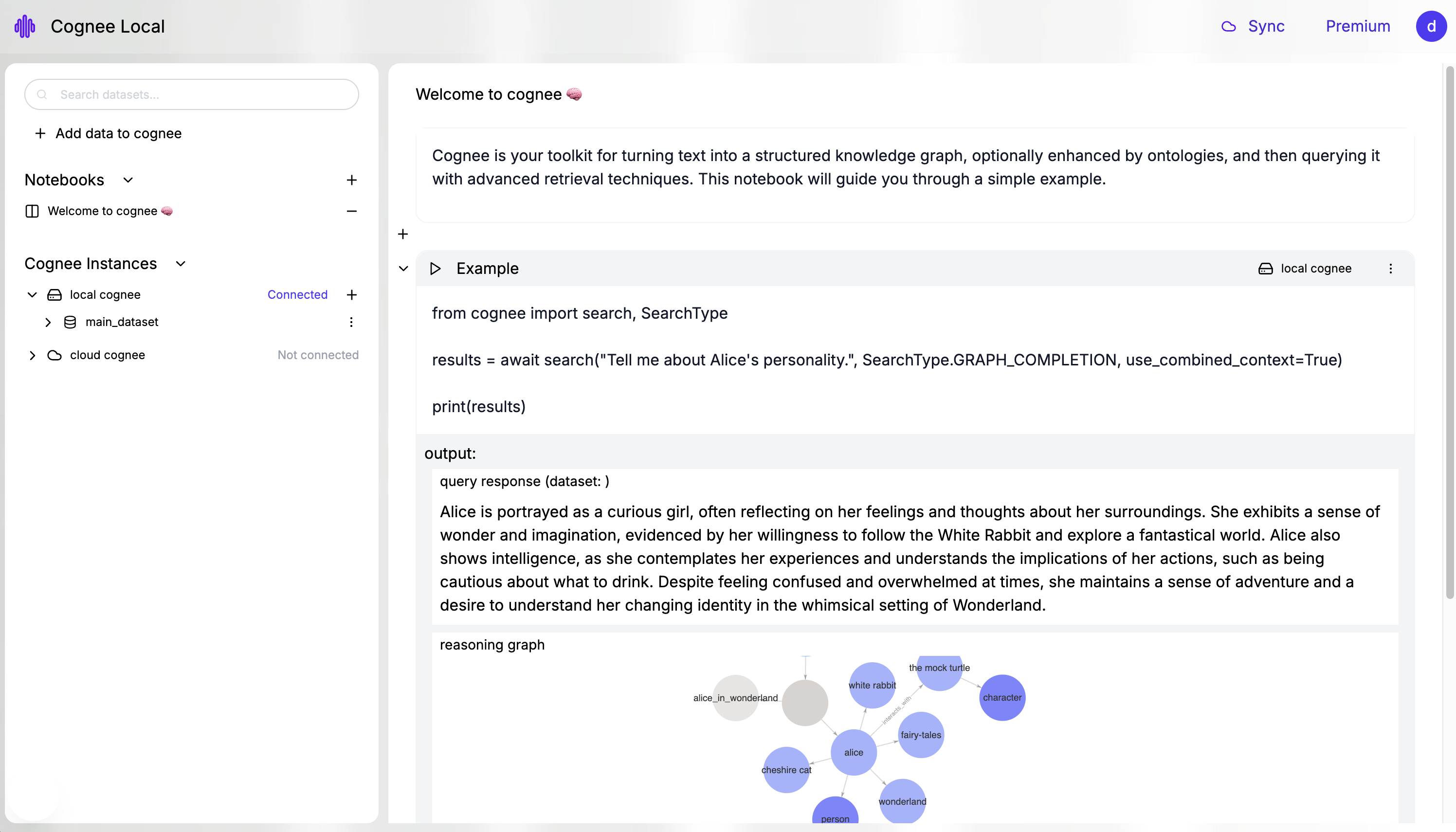

The Cognee interface enables developers to build and query AI memory systems with both graph and vector search capabilities.

The Challenge

Agent teams often struggle with stateless context and homegrown RAG stacks. They juggle a graph here, a vector store there, and ad hoc rules in between. This raises reliability risks and slows iteration. Cognee needed a vector store that matched its isolation model, supported per-workspace development, and stayed simple for day-to-day work.

The Isolation Problem

Cognee’s isolation model is the way it separates memory stores so that every developer, user, or test instance gets its own fully independent workspace. Instead of sharing a single vector database or graph across contexts, Cognee spins up a dedicated set of backends—most notably a LanceDB store —for each workspace.

This approach solves a critical problem in AI development: when multiple developers work on the same project, or when running tests in parallel, shared state can lead to unpredictable behavior, data corruption, and debugging nightmares. Traditional vector databases require complex orchestration to achieve this level of isolation.

LanceDB as a Solution

Cognee chose LanceDB because it fits the way engineers actually build and test memory systems. Since LanceDB is file based , Cognee can spin up a separate store per test, per user, and per workspace.

Why File-Based Storage Matters

Developers run experiments in parallel without shared state, and they avoid the overhead of managing a separate vector database service for every sandbox. This approach shortens pull-request cycles, improves demo reliability, and makes multi-tenant development more predictable.

Unlike traditional vector databases that require running servers, LanceDB’s file-based approach means each workspace gets its own directory on disk. This eliminates the complexity of managing database connections, ports, and service dependencies during development and testing.

How the Pieces Fit

Cognee delivers a durable memory layer for AI agents by unifying a knowledge graph with high-performance vector search. It ingests files, APIs, and databases, then applies an Extract–Cognify–Load model to chunk content, enrich entities and relationships, add temporal context, and write both graph structures and embeddings for retrieval.

LanceDB is the default vector database in this stack, which keeps embeddings and payloads close to each other and removes extra orchestration. Because LanceDB is file based , Cognee can provision a clean store per user, per workspace, and per test, so teams iterate quickly without shared state or heavy infrastructure.

From Development to Production

This architecture scales from a laptop to production without changing the mental model. Developers can start locally with Cognee ’s UI, build and query memory against LanceDB , and validate behavior with isolated sandboxes.

The Cognee architecture shows how data flows from sources through the ECL pipeline to both graph and vector storage backends.

When it is time to go live, the same project can move to Cognee’s hosted service - cogwit , which manages Kuzu for graph, LanceDB for vectors, and PostgreSQL for metadata, adding governance and autoscaling as needed. With Cognee UI, teams can switch between local and cloud (cogwit) environments easily.

Cognee ’s Memify pipeline keeps memory fresh after deployment by cleaning stale nodes, strengthening associations, and reweighting important facts, which improves retrieval quality without full rebuilds.

The result is a simpler and more reliable path to agent memory than piecing together separate document stores, vector databases, and graph engines.

Local Development Workflow

This diagram shows sources flowing into Cognee on a developer’s machine, with each workspace writing to its own LanceDB store. The benefit is clean isolation: tests and demos never collide, sandboxes are easy to create and discard, and engineers iterate faster without running a separate vector database service.

Each workspace operates independently, with its own:

- Vector embeddings stored in LanceDB

- Knowledge graph structure

- Metadata and temporal context

- Query and retrieval capabilities

Figure 2: Local-first memory with per-workspace isolation

flowchart LR

%% Figure 1: Local-first memory with per-workspace isolation

subgraph S["Sources"]

s1[Files]

s2[APIs]

end

subgraph C["Cognee (local)"]

c1[Ingest]

c2[Cognify]

c3[Load]

end

subgraph L["LanceDB (per workspace)"]

l1[/.../workspace-a/vectors/]

l2[/.../workspace-b/vectors/]

end

Agent[Agent or App]

s1 --> c1

s2 --> c1

c1 --> c2

c2 --> c3

%% Write vectors per isolated workspace

c3 --> l1

c3 --> l2

%% Query path

Agent -->|query| c2

c2 -->|read/write| l1

l1 -->|results| c2

c2 -->|answers| Agent

User Interface

The Cognee Local interface provides a workspace for building and exploring AI memory directly on a developer’s machine.

From the sidebar, users can connect to local or cloud instances, manage datasets, and organize work into notebooks. Each notebook offers an interactive environment for running code, querying data, and visualizing results.

The Cognee Local UI provides an intuitive interface for building and querying AI memory systems with both graph and vector search capabilities.

The UI supports importing data from multiple sources, executing searches with graph or vector retrieval, and inspecting both natural-language answers and reasoning graphs. It is designed to make experimentation straightforward—users can ingest data, build structured memory, test retrieval strategies, and see how the system interprets and connects entities, all within a single environment. This local-first approach makes it easy to prototype, debug, and validate memory pipelines before moving them to a hosted service.

Memory Processing Pipeline

Cognee converts unstructured and structured inputs into an evolving knowledge graph, then couples it with embeddings for retrieval. The result is a memory that improves over time and supports graph-aware reasoning.

This pipeline processes data through three distinct phases:

- Extract: Pull data from various sources (files, APIs, databases)

- Cognify: Transform raw data into structured knowledge with embeddings

- Load: Store both graph and vector data for efficient retrieval

Figure 2: Cognee memory pipeline

flowchart LR

%% Figure 2: Extract -> Cognify -> Load

E[Extract]

subgraph CG["Cognify"]

cg1[Chunk]

cg2[Embed]

cg3[Build knowledge graph]

cg4[Entity and relation enrichment]

cg5[Temporal tagging]

end

subgraph LD["Load"]

ld1[Write graph]

ld2[Write vectors]

subgraph Backends

b1[(Kuzu)]

b2[(LanceDB)]

end

svc[Serve graph and vector search]

end

E --> cg1 --> cg2 --> cg3 --> cg4 --> cg5

cg5 --> ld1

cg5 --> ld2

ld1 --> b1

ld2 --> b2

b1 --> svc

b2 --> svc

Here the Extract, Cognify, and Load stages turn raw inputs into a knowledge graph and embeddings , then serve both graph and vector search from Kuzu and LanceDB . Users gain higher quality retrieval with provenance and time context, simpler pipelines because payloads and vectors stay aligned, and quicker updates as memory evolves.

Production Deployment Path

Teams begin locally and promote the same model to Cognee’s hosted service - cogwit - when production requirements such as scale and governance come into play. This seamless transition is possible because the same data structures and APIs work in both local and hosted environments.

Learn more about LanceDB and explore Cognee .

Figure 3: Hosted path with Cognee’s production service

flowchart LR

%% Figure 3: Local -> Cogwit hosted path

LUI[Local Cognee UI]

subgraph CW["Cognee Hosted Service"]

APIs[Production memory APIs]

note1([Autoscaling • Governance • Operations])

subgraph Backends

K[(Kuzu)]

V[(LanceDB)]

P[(PostgreSQL)]

end

end

Teams[Teams or Agents]

LUI -->|push project| CW

APIs --> K

APIs --> V

APIs --> P

Teams <-->|query| APIs

This figure shows a smooth promotion from the local UI to cogwit - Cognee’s hosted service, which manages Kuzu , LanceDB , and PostgreSQL behind production APIs. Teams keep the same model while adding autoscaling, governance, and operational controls, so moving from prototype to production is low risk and does not require a redesign.

Why LanceDB fit

LanceDB aligns with Cognee ’s isolation model. Every test and every user workspace can have a clean database that is trivial to create and remove. This reduces CI friction, keeps parallel runs safe, and makes onboarding faster. When teams do need more control, LanceDB provides modern indexing options such as IVF-PQ and HNSW-style graphs , which let engineers tune recall, latency, and footprint for different workloads.

Technical Advantages

Beyond isolation, LanceDB offers several technical advantages that make it ideal for Cognee’s use case:

- Unified Storage: Original data and embeddings stored together eliminate synchronization issues

- Zero-Copy Evolution: Schema changes don’t require data duplication

- Hybrid Search: Native support for both vector search and full-text search

- Performance: Optimized for both interactive queries and batch processing

- Deployment Flexibility: Works locally, in containers, or in cloud environments

Results

These results show that the joint Cognee–LanceDB approach is not just an internal efficiency gain but a direct advantage for end users. Developers get faster iteration and clearer insights during prototyping, while decision makers gain confidence that the memory layer will scale with governance and operational controls when moved to production. This combination reduces both time-to-market and long-term maintenance costs.

Development Velocity Improvements

“LanceDB gives us effortless, truly isolated vector stores per user and per test, which keeps our memory engine simple to operate and fast to iterate.” - Vasilije Markovic, Cognee CEO

Using LanceDB has accelerated Cognee’s development cycle and improved reliability. File-based isolation allows each test, workspace, or demo to run on its own vector store, enabling faster CI, cleaner multi-tenant setups, and more predictable performance. Onboarding is simpler since developers can start locally without provisioning extra infrastructure.

Product Quality Gains

At the product level, combining Cognee’s knowledge graph with LanceDB’s vector search has improved retrieval accuracy, particularly on multi-hop reasoning tasks like HotPotQA . The Memify pipeline further boosts relevance by refreshing memory without full rebuilds. Most importantly, the same local setup can scale seamlessly to cogwit - Cognee’s hosted service, giving teams a direct path from prototype to production without redesign.

Key benefits include:

- Faster development cycles due to isolated workspaces

- Reduced environment setup time with file-based storage

- Improved multi-hop reasoning accuracy with graph-aware retrieval

- Seamless deployments when scaling to production

Recent product releases at Cognee

Cognee has shipped a focused set of updates that make the memory layer smarter and easier to operate. A new local UI streamlines onboarding. The Memify post-processing pipeline keeps the knowledge graph fresh by cleaning stale nodes, adding associations, and reweighting important memories without a full rebuild.

Memify Pipeline in Action

The Memify pipeline represents a significant advancement in AI memory management, enabling continuous improvement without costly rebuilds.

Here is a typical example of the Memify pipeline for post-processing knowledge graphs:

import asyncio

import cognee

from cognee import SearchType

async def main():

# 1) Add two short chats and build a graph

await cognee.add([

"We follow PEP8. Add type hints and docstrings.",

"Releases should not be on Friday. Susan must review PRs.",

], dataset_name="rules_demo")

await cognee.cognify(datasets=["rules_demo"]) # builds graph

# 2) Enrich the graph (uses default memify tasks)

await cognee.memify(dataset="rules_demo")

# 3) Query the new coding rules

rules = await cognee.search(

query_type=SearchType.CODING_RULES,

query_text="List coding rules",

node_name=["coding_agent_rules"],

)

print("Rules:", rules)

asyncio.run(main())Self-improving memory logic and time awareness help agents ground responses in what has changed and what still matters. A private preview of graph embeddings explores tighter coupling between structure and retrieval. Cognee also reports strong results on graph-aware evaluation, including high correctness on multi-hop tasks such as HotPotQA . Cognee’s hosted service - cogwit opens this experience to teams that want managed backends from day one.

What’s Next

Looking ahead, Cognee is focusing on:

- Enhanced graph embedding capabilities for better structure-retrieval coupling

- Improved time-aware reasoning for dynamic knowledge updates

- Expanded integration options for enterprise workflows

- Advanced analytics and monitoring for production deployments

Why this joint solution is better

Many stacks bolt a vector database to an unrelated document store and a separate graph system. That adds orchestration cost and creates brittle context. By storing payloads and embeddings together, LanceDB reduces integration points and improves locality. Cognee builds on this to deliver graph-aware retrieval that performs well on multi-step tasks, while the Memify pipeline improves memory quality after deployment rather than forcing rebuilds. The combination yields higher answer quality with less operational churn, and it does so with a simple path from a laptop to a governed production environment.

The Integration Advantage

Traditional approaches require complex orchestration between multiple systems:

- Vector database for similarity search

- Graph database for relationships

- Document store for original content

- Custom glue code to keep everything synchronized

Cognee + LanceDB eliminates this complexity by providing a unified platform that handles all these concerns natively.

Getting Started is Simple

Developers can start local, install Cognee , and build a memory over files and APIs in minutes. LanceDB persists embeddings next to the data, so indexing and queries remain fast. When the prototype is ready, they can push to cogwit - Cognee’s hosted service and inherit managed Kuzu , LanceDB , and PostgreSQL without changing how they think about the system. Technical decision makers get a simpler architecture, a clear upgrade path, and governance features when needed.

The Business Case

For organizations, this means:

- Faster time-to-market: No complex infrastructure setup required

- Lower operational overhead: Unified platform reduces maintenance burden

- Better developer experience: Local development matches production environment

- Reduced vendor lock-in: Open source components provide flexibility

- Scalable architecture: Grows from prototype to enterprise without redesign

Getting started

- Install Cognee , build a small memory with the local LanceDB store, and enable Memify to see how post-processing improves relevance.

- When production SLAs and compliance become priorities, promote the same setup to cogwit - Cognee’s hosted service and gain managed scale and operations.

Next Steps

Ready to build AI memory systems that scale? Start with the Cognee documentation and explore how LanceDB can power your next AI application. The combination of local development simplicity and production scalability makes this stack ideal for teams building the future of AI.