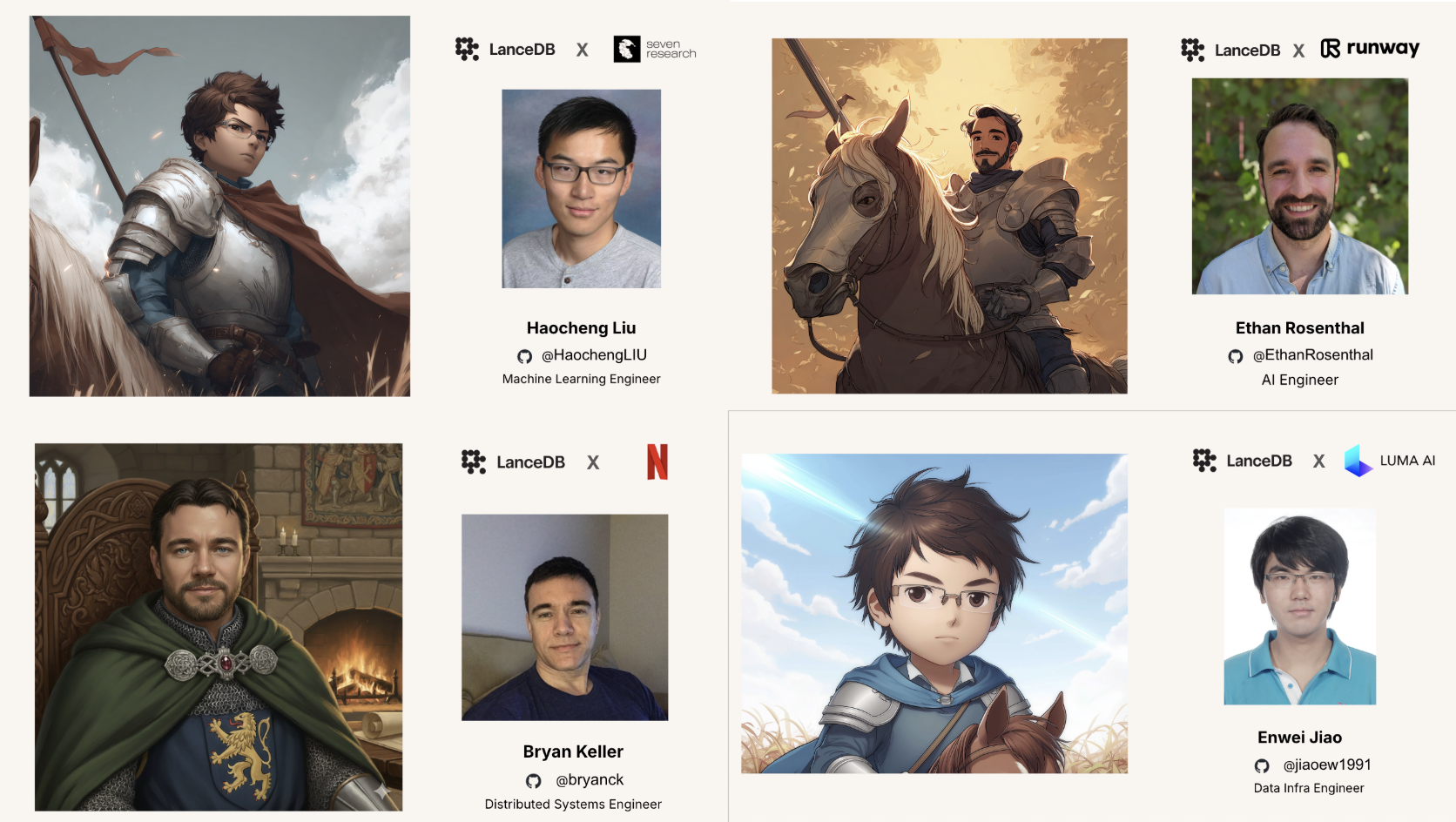

🛡️ Meet the Newly Knighted Lancelot

In May, we announced three new members of our Lancelot Round Table. It is time again for us to welcome four new noble members to the Roundtable, from Netflix, RunwayML, Luma AI, and Seven Research! A huge thank you to each of them for their continued support of and contributions to lance and lancedb. For the full list of Lancelots, please check our GitHub wiki page.

⚔️ 🐎 Hail to the Knights of the Lancelot Roundtable!

Building Semantic Video Recommendations with TwelveLabs and LanceDB

Tutorial: a semantic video recommendation engine powered by TwelveLabs, LanceDB, and Geneva.

- TwelveLabs provides multimodal embeddings that encode the narrative, mood, and actions in a video, going far beyond keyword matching.

- LanceDB stores these embeddings together with metadata and supports fast vector search through a developer-friendly Python API.

- Geneva, built on LanceDB and powered by Ray, scales the entire pipeline seamlessly from a single laptop to a large distributed cluster, without changing your code.
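
To make the idea of embedding-based recommendation concrete, here is a minimal sketch in plain Python. It is not the TwelveLabs, LanceDB, or Geneva API: the three-dimensional vectors, the `catalog` rows, and the `recommend` helper are all hypothetical stand-ins, and the brute-force scan below is what a vector database replaces with an index at scale.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def recommend(query_vec, catalog, k=2):
    """Return the titles of the k rows most similar to query_vec."""
    scored = [
        (cosine_similarity(query_vec, row["vector"]), row["title"])
        for row in catalog
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [title for _, title in scored[:k]]


# Hypothetical toy embeddings; real video embeddings have hundreds of dimensions.
catalog = [
    {"title": "heist thriller", "vector": [0.9, 0.1, 0.0]},
    {"title": "cooking show", "vector": [0.0, 0.2, 0.9]},
    {"title": "spy drama", "vector": [0.8, 0.3, 0.1]},
]

top = recommend([1.0, 0.2, 0.0], catalog)
print(top)
```

Storing the metadata (`title`) next to the vector in each row mirrors the point above about keeping embeddings and metadata together, so a search result can be shown without a second lookup.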

Productionalize AI Workloads with Lance Namespace, LanceDB, and Ray

Most of the work in AI isn’t in the model. It’s in the data pipeline. Organizing billions of rows of data for indexing and retrieval isn’t easy. This is where Ray and LanceDB come together:

- Ray takes care of the heavy lifting: distributing data ingestion, embedding generation, and feature engineering across clusters.

- LanceDB makes the results instantly queryable: fast vector search, hybrid search, and analytics at production scale.

🎤 Watch the Recordings!

📚 Good Reads

Setup Real-Time Multimodal AI Analytics with Apache Fluss (incubating) and Lance

Real-time multimodal AI is finally practical! With Apache Fluss (Incubating) as the streaming storage layer and Lance as the AI-optimized lakehouse, you can stream data in right now, tier it automatically, and query it instantly for RAG, analytics, or training.

💼 Case Study

How Cognee Builds AI Memory Layers with LanceDB

“LanceDB gives us effortless, truly isolated vector stores per user and per test, which keeps our memory engine simple to operate and fast to iterate.”

-Vasilije Markovic, Cognee CEO

Distributed Training with LanceDB and Tigris | Tigris Object Storage

Training on multimodal datasets at scale is hard. They grow fast, they don’t fit on a single disk, and traditional workarounds (like network filesystems) introduce complexity and cost. But what if you could treat storage as infinite and let your infrastructure handle the hard parts for you?

That’s exactly what Xe Iaso shows in this new guide: how LanceDB and Tigris Data make large-scale distributed training simple. No more worrying about local storage limits, manual sharding, or egress fees, just seamless scaling from research to production.

📊 LanceDB Enterprise Product News

| Feature | Description |
|---|---|
| RabitQ quantization for vector indices | RabitQ is an alternative to PQ that is more memory- and compute-efficient. See https://arxiv.org/abs/2405.12497 for more details. |
| Significantly-reduced latencies for full-text search | Full-text search P95 latencies are now reduced by up to 32.3%. Cold start P95 latencies are now reduced by up to 18.7%. |
| Scalar indices for JSON columns with type-aware indexing | LanceDB now has the ability to create JSON scalar indices that are type-aware, instead of assuming all extracts are strings. This should resolve the class of issues that are a result of no type awareness. |
| KMeans algorithm runs significantly faster | We improved the implementation of our KMeans algorithm to run ~30x faster than before, with even more gains at large k. This results in IVF-based vector indices being built more quickly. |
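
To illustrate the class of issues that type-aware JSON indexing is meant to avoid, here is a small sketch in plain Python. It does not use or describe LanceDB's implementation: the `price` field and both `extract_*` helpers are hypothetical, and the example only shows how treating every extracted value as a string changes ordering and range filters.

```python
import json

# Hypothetical JSON documents with a numeric field.
rows = ['{"price": 9}', '{"price": 10}', '{"price": 100}']


def extract_as_string(doc, key):
    """Extract a value and coerce it to a string (no type awareness)."""
    return str(json.loads(doc)[key])


def extract_typed(doc, key):
    """Extract a value and keep its JSON type (here, an integer)."""
    return json.loads(doc)[key]


# Strings sort lexicographically, so "100" lands before "9".
string_order = sorted(extract_as_string(r, "price") for r in rows)
typed_order = sorted(extract_typed(r, "price") for r in rows)

# The same "price > 50" filter selects different rows under each scheme.
string_matches = [r for r in rows if extract_as_string(r, "price") > "50"]
typed_matches = [r for r in rows if extract_typed(r, "price") > 50]

print(string_order, typed_order)
print(string_matches, typed_matches)
```

An index built over the string form would inherit the lexicographic ordering, which is why range queries on numeric JSON fields need the type to be known when the index is built.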

🫶 Community contributions

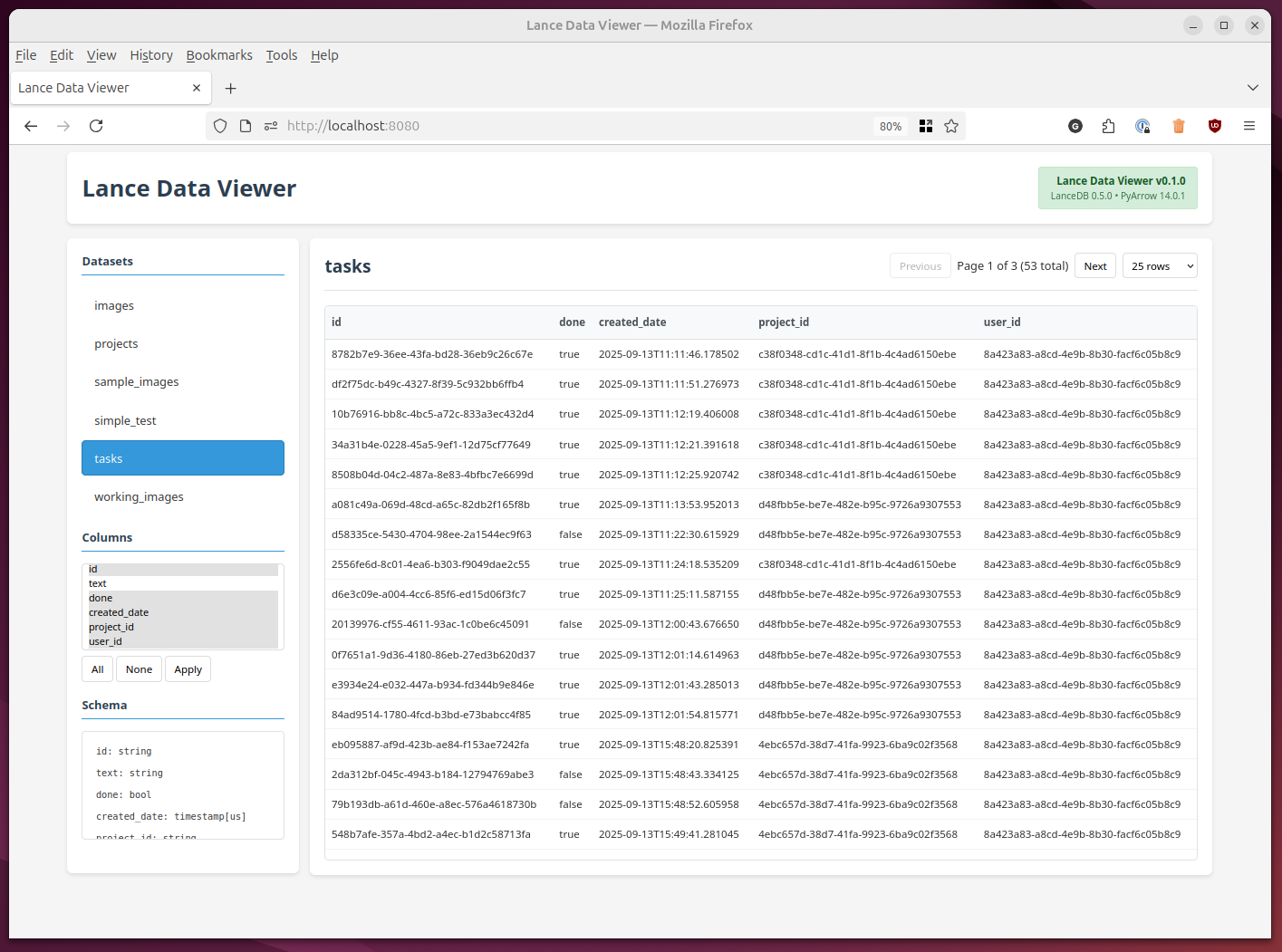

Introducing Lance Data Viewer: A Simple Way to Explore Lance Tables

When exploring LanceDB for his own projects, Gordon realized there was no simple way to peek inside Lance tables, something many of us take for granted with relational databases. Instead of waiting, he built one: Lance Data Viewer, an open-source, containerized web UI for browsing Lance datasets.

A heartfelt thank you to our community contributors of lance and lancedb this past month: @majin1102 @wayneli-vt @wojiaodoubao @chenghao-guo @huyuanfeng2018 @ColdL @jtuglu1 @xloya @yanghua @steFaiz @morales-t-netflix @felix-schultz @timsaucer @HaochengLIU @beinan @fangbo @jaystarshot @lorinlee @manhld0206 @naaa760 @vlovich @pimdh

🌟 Open Source Releases Spotlight

| Project | Version | Highlights |
|---|---|---|
| LanceDB | 0.22.1 | Shallow clone support, mTLS, custom request header support for remote database, Mean reciprocal rank reranker support |
| Lance | 0.37 | New table metadata and incremental metadata update support, S3 throughput optimization, distributed compaction in Java, FTS UDTF support in DataFusion, scalar and vector index support for nested struct fields |
| Lance | 0.36 | Bloom filter index support, scalar index and compaction APIs in Java |
| Lance | 0.35 | New lance-tools CLI, JSONB read write support, JSON UDFs, scalar index for JSON, FTS index support for contains_token function, Apache OpenDAL support |
| Lance Namespace | 0.0.15-0.0.16 | Apache Gravitino, Apache Polaris, Google Dataproc support, simplified basic operations for REST namespace implementers |
| Lance Ray | 0.0.6 | Distributed build for FTS index, btree index, fragment level operations for customized write workflows |
| Lance Spark | 0.0.12-0.0.13 | COUNT(*) support, BINARY type with Lance Blob encoding read and write support |