Engineering /

LanceDB / March 20, 2024

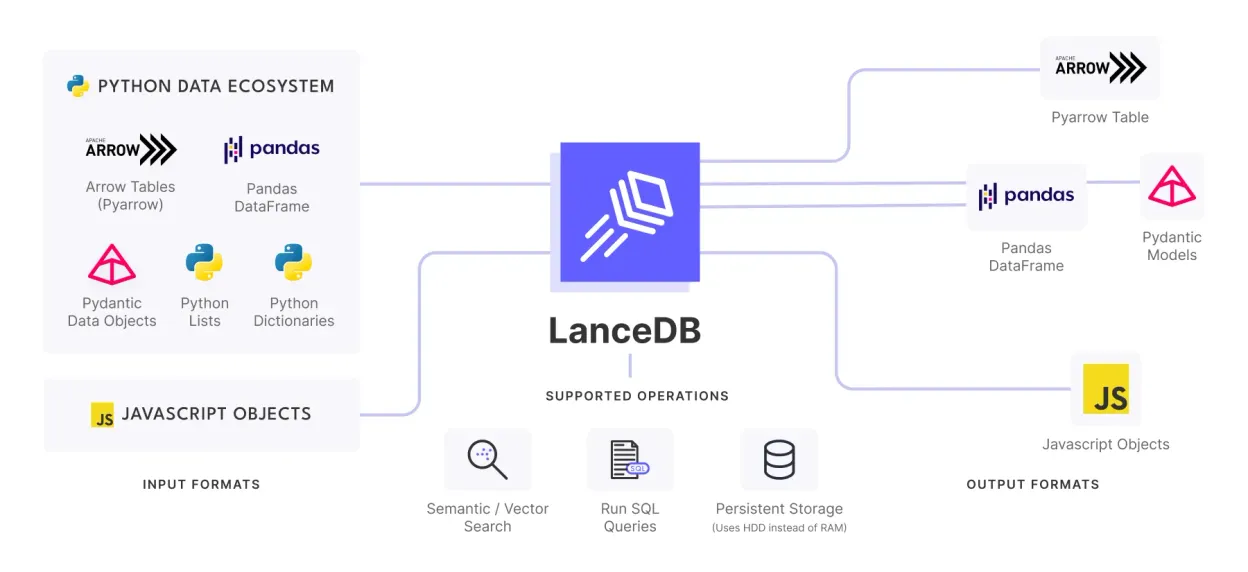

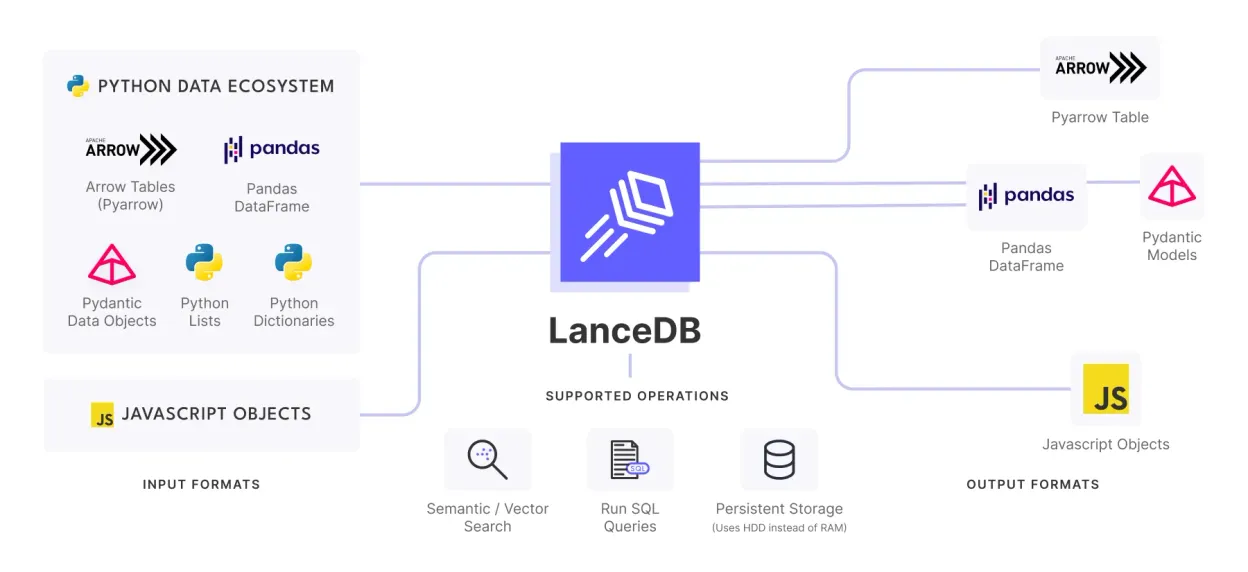

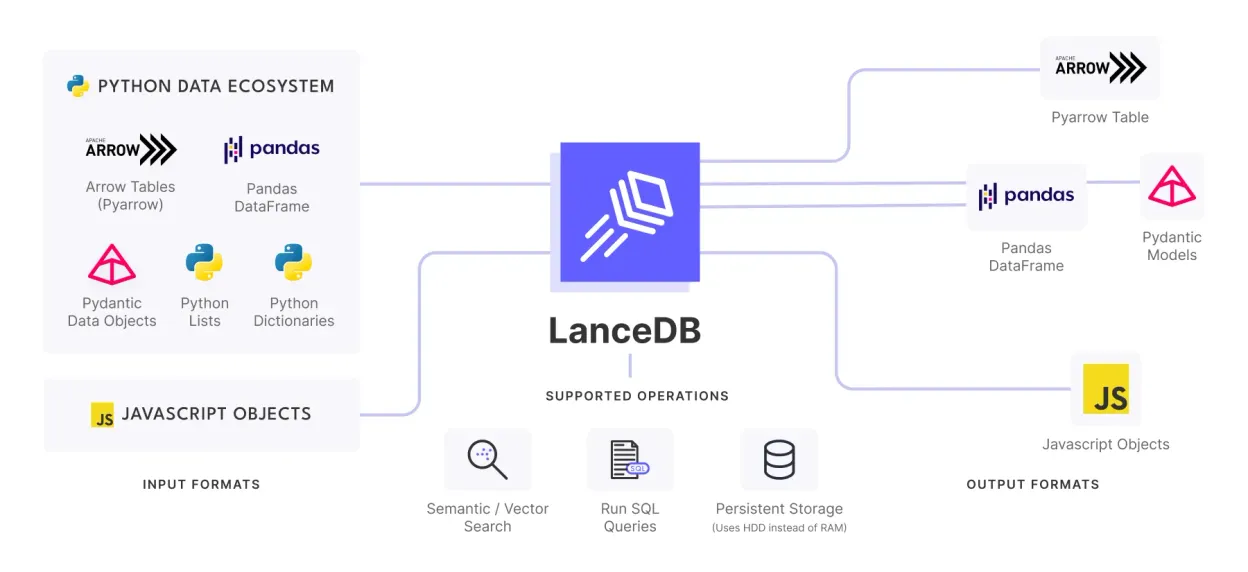

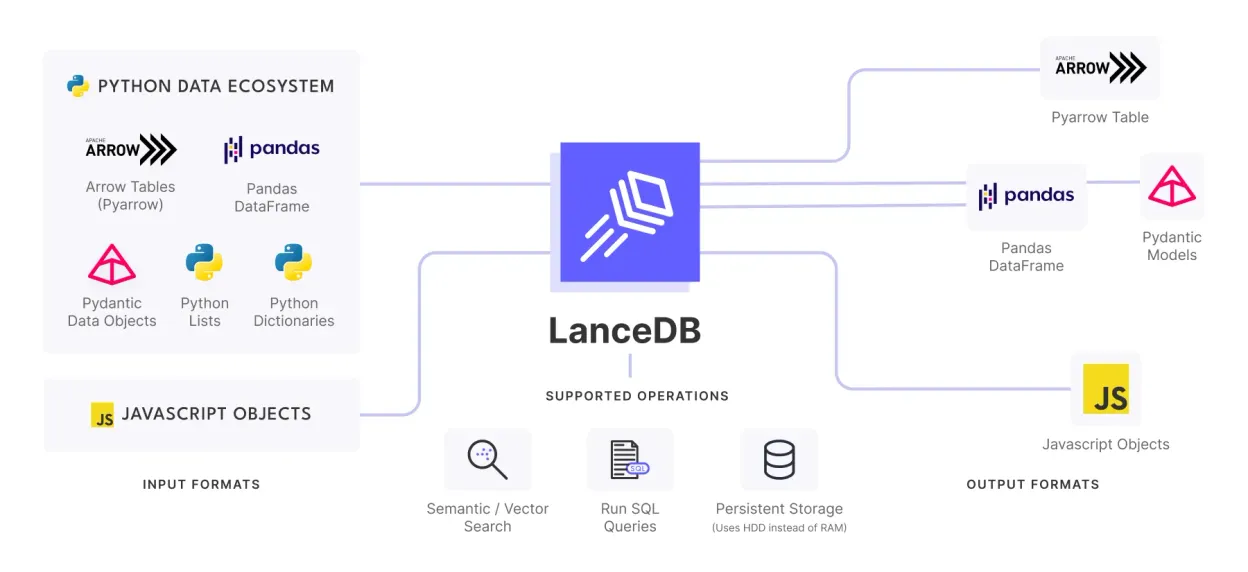

Build a multimodal fashion search engine with LanceDB and CLIP embeddings. Follow a step‑by‑step workflow to register embeddings, create the table, query by text or image, and ship a Streamlit UI.

Engineering /

LanceDB / March 8, 2024

Learn about custom datasets for efficient LLM training using Lance. Get practical steps, examples, and best practices you can use now.

Engineering /

LanceDB / March 4, 2024

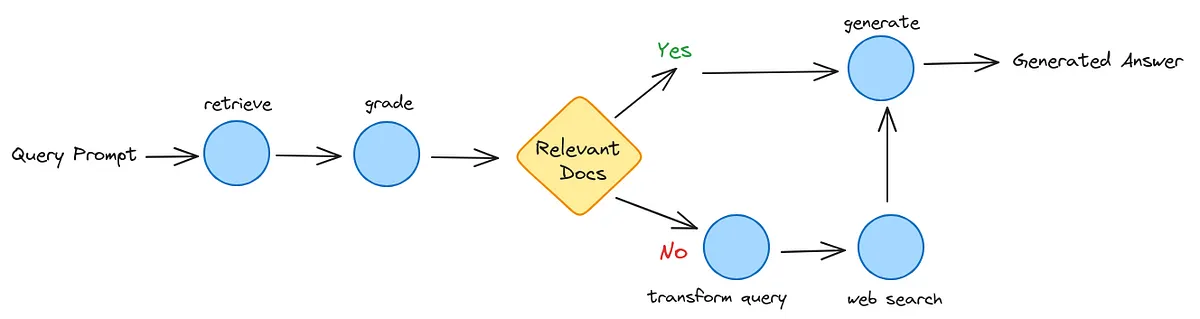

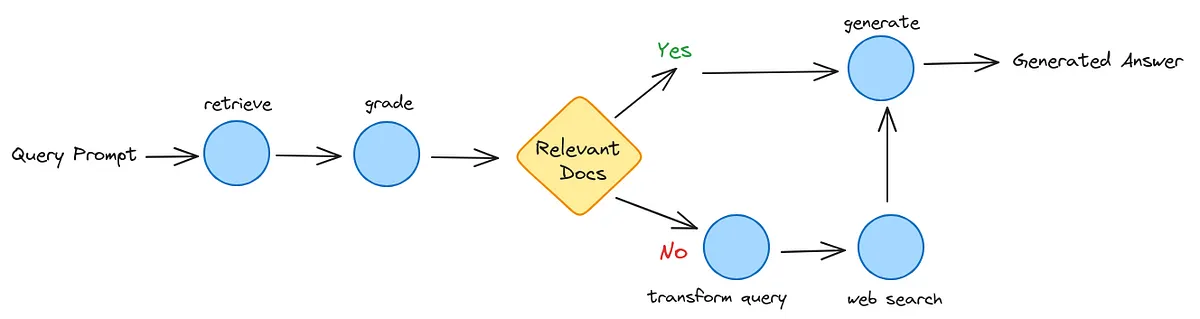

Text-generation models are good at generating content, but they are sometimes unreliable at returning facts. This is a consequence of how they are trained.

Engineering /

LanceDB / February 19, 2024

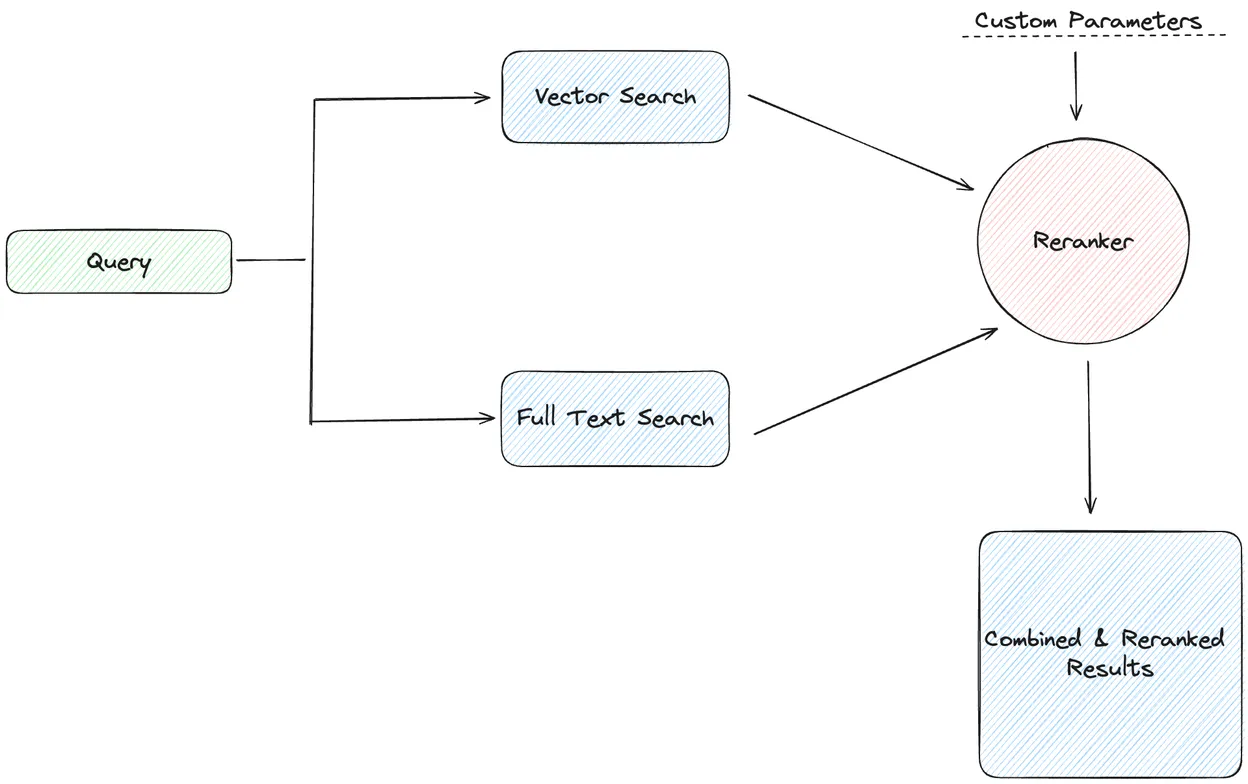

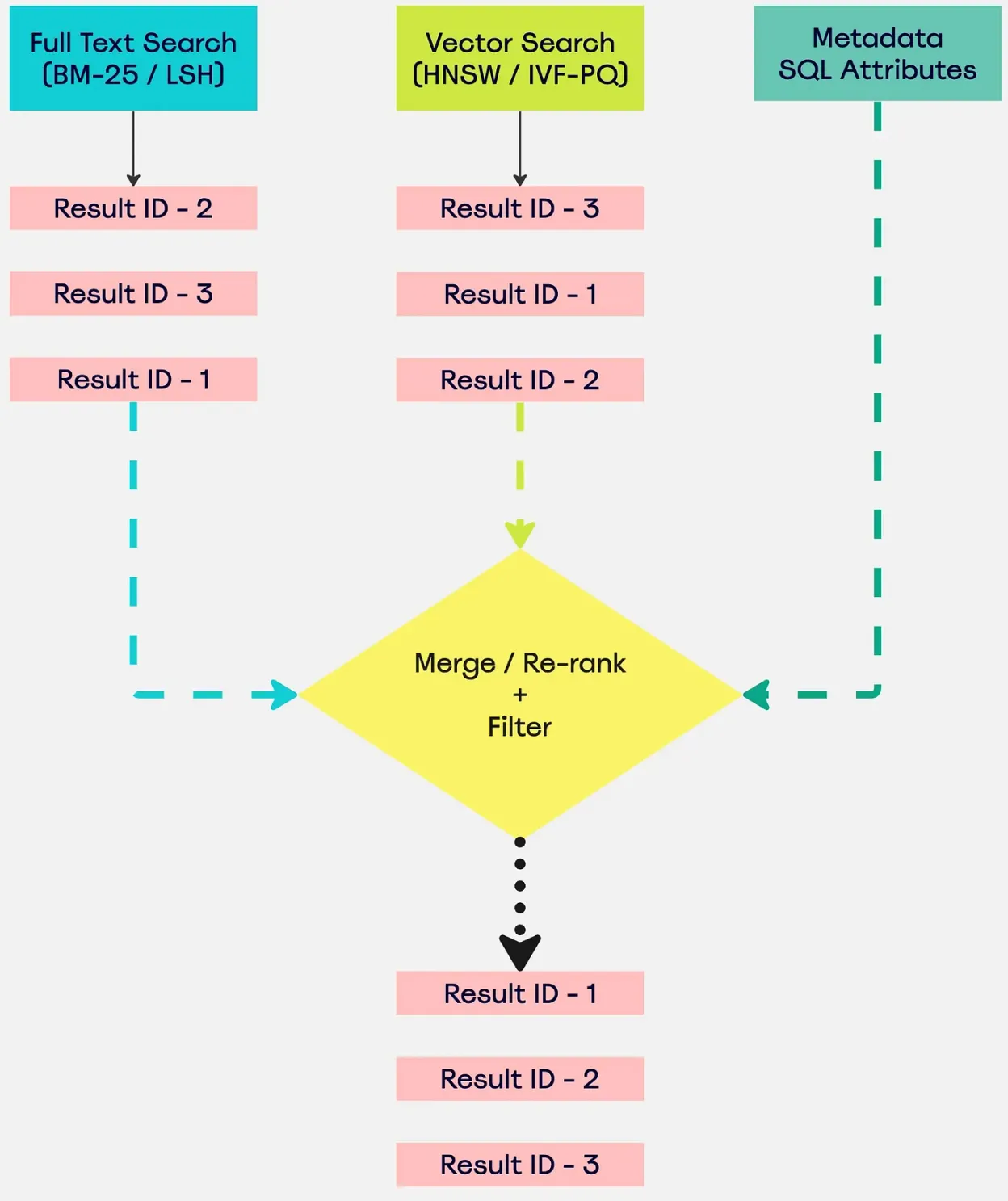

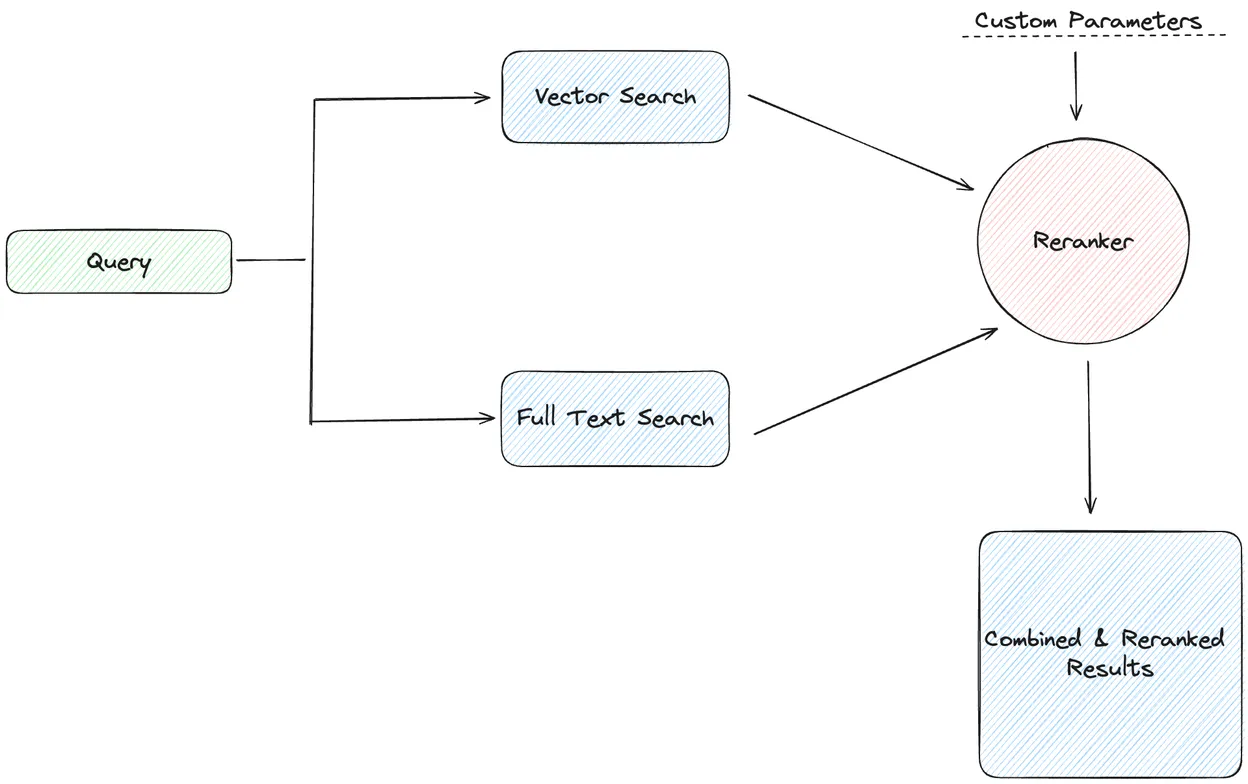

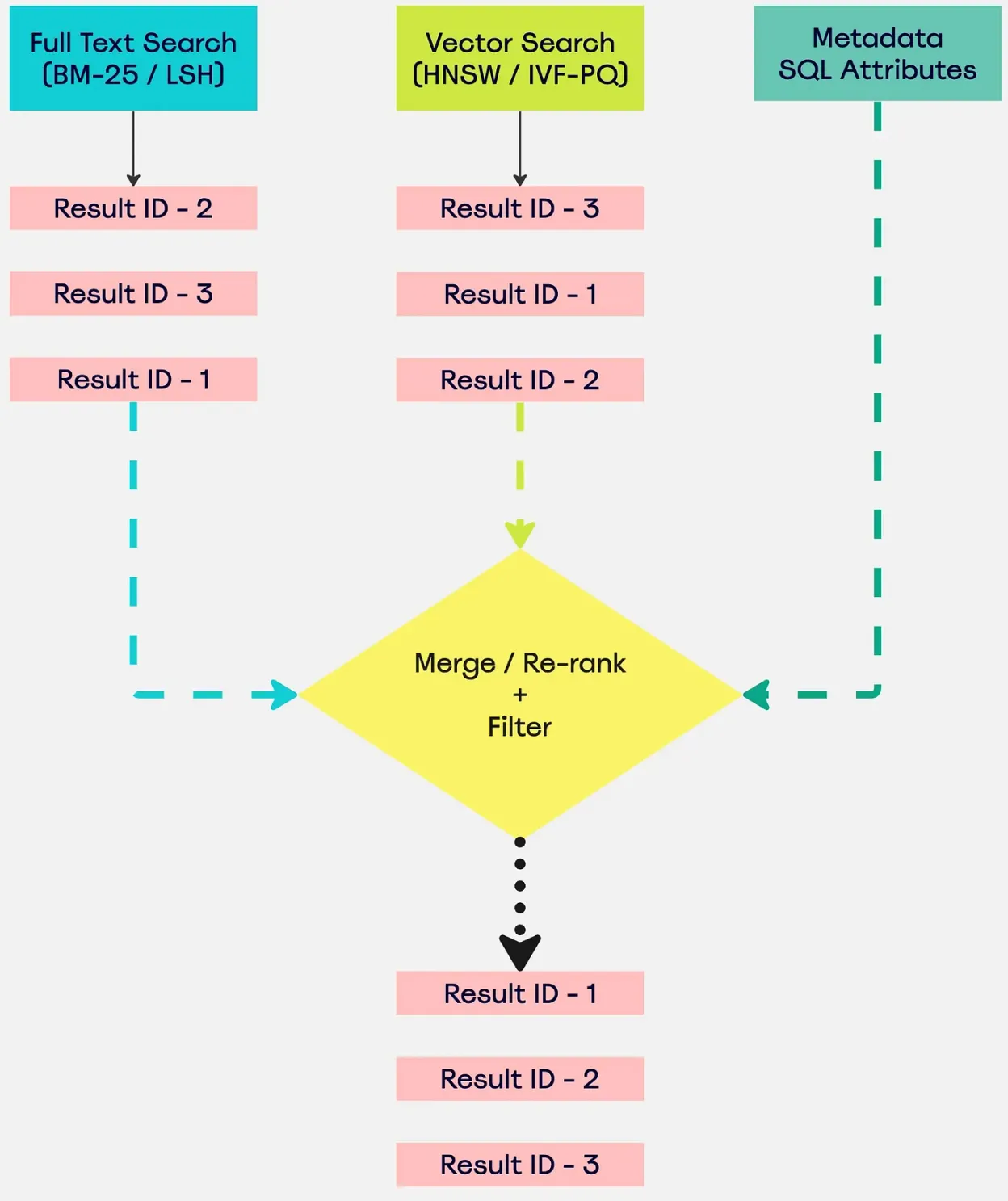

Combine keyword and vector search for higher‑quality results with LanceDB. This post shows how to run hybrid search and compare rerankers (linear combination, Cohere, ColBERT) with code and benchmarks.

Engineering /

Mahesh Deshwal / February 18, 2024

Learn about hybrid search: RAG for real-life, production-grade applications. Get practical steps, examples, and best practices you can use now.

Engineering /

Kaushal Choudhary / January 9, 2024

Learn about efficient RAG with compression and filtering. Get practical steps, examples, and best practices you can use now.

Engineering /

LanceDB / December 17, 2023

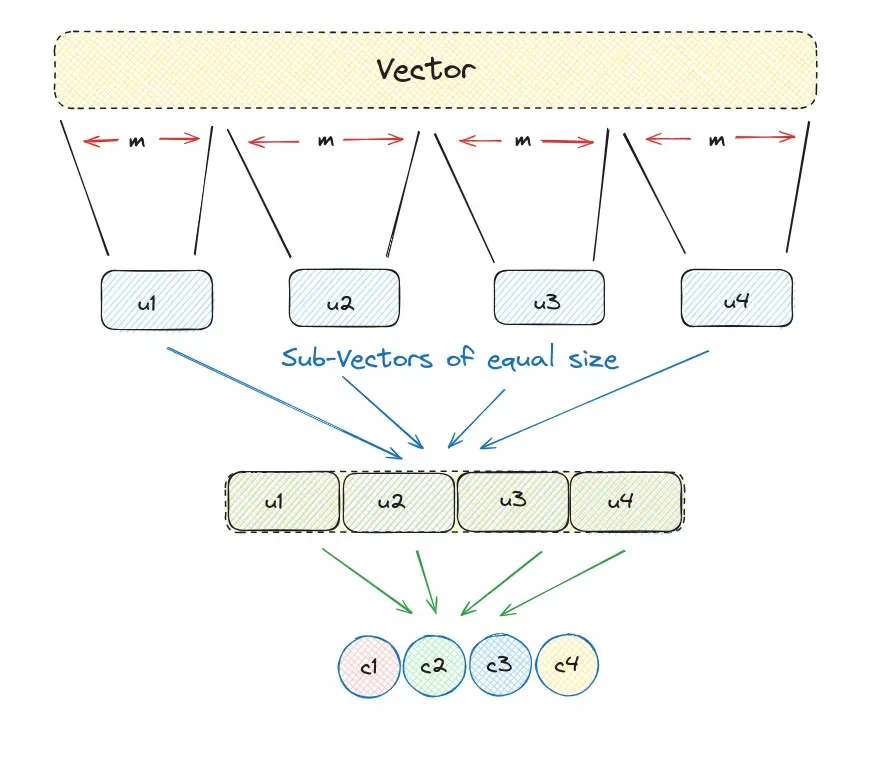

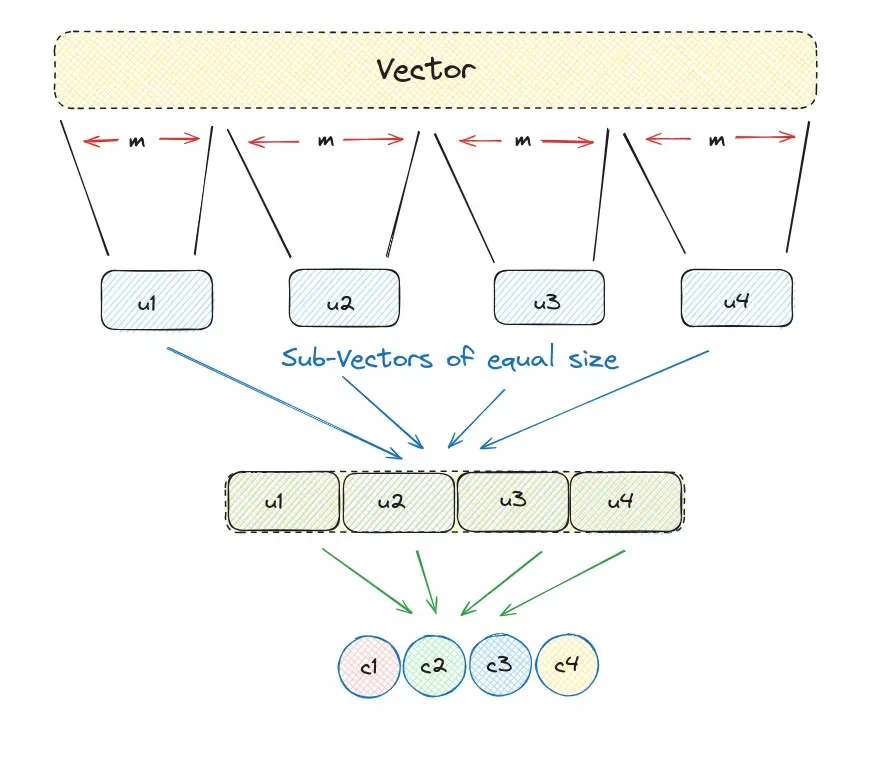

Compress vectors with PQ and accelerate retrieval with IVF_PQ in LanceDB. The tutorial explains the concepts, memory savings, and a minimal implementation with search tuning knobs.

Engineering /

Mahesh Deshwal / December 15, 2023

Learn about modified RAG: the parent document and bigger chunk retrievers. Get practical steps, examples, and best practices you can use now.

Engineering /

Kaushal Choudhary / December 12, 2023

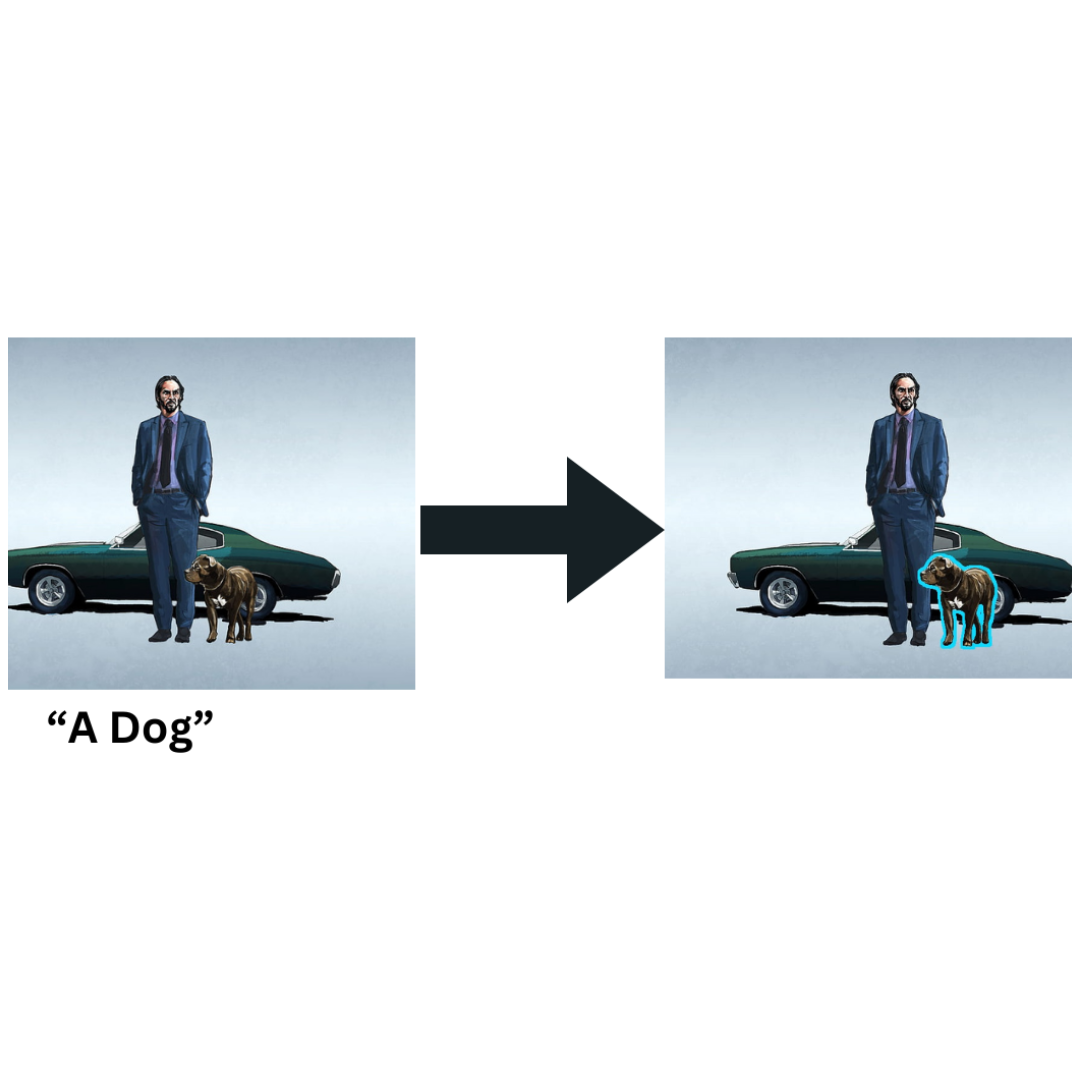

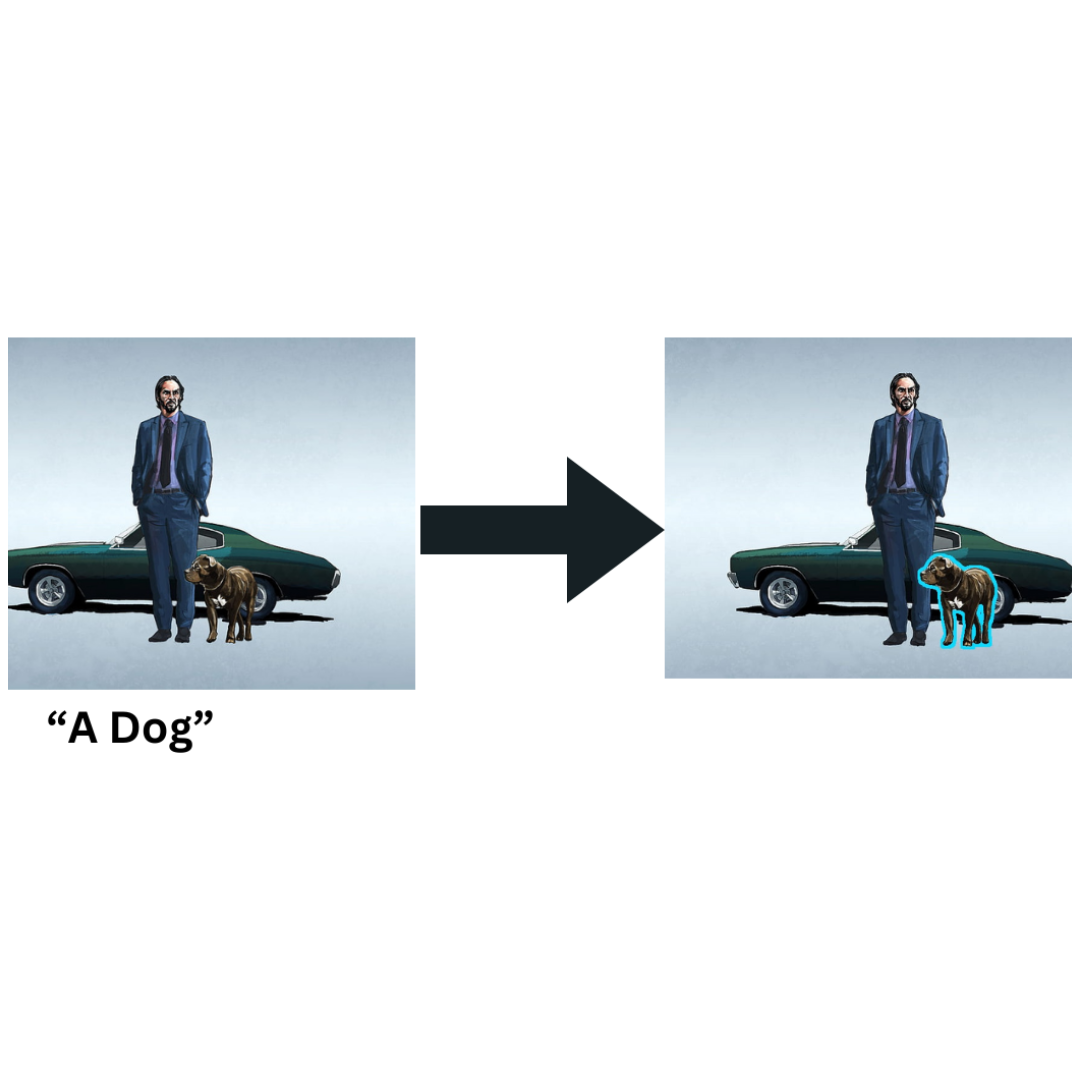

Learn how to search within an image with Segment Anything. Get practical steps, examples, and best practices you can use now.